Most performance comparisons are either unfair or unrealistic. We’ve all seen the “nuclear stress tests” that push systems to the brink, yielding little insight into real-world performance. This isn’t one of those.

I wanted to find out, under a fair and realistic workload, which platform truly shines for high-concurrency WebSocket applications: Elixir’s elegant, actor-based concurrency or Go’s pragmatic, goroutine-driven model.

This isn’t about proving one is “better” in all scenarios, but about understanding their unique strengths and weaknesses when pushed to their limits. This is a comprehensive battle of architectures.

Why This Comparison Matters

My goal was to create a fair, transparent, and comprehensive comparison. A lot of benchmarks fail because they:

- Use different test parameters for each platform.

- Focus on unrealistic “system-breaking” stress tests.

- Don’t apply proper optimisations to both languages.

This showdown focuses on real-world performance, with identical test parameters and a transparent methodology.

Full disclosure: While I’m an Elixir expert, my Go knowledge is from extensive research and community best practices. If you’re a Go expert, I invite you to challenge my findings — this is about collaborative learning, not winning an argument.

Test Environment & Machine Setup

We used a high-performance machine with identical system-level optimizations to ensure a level playing field.

Hardware Specifications

- Machine: MacBook Pro M2 Pro

- CPU: Apple M2 Pro (10 cores)

- Memory: 16GB unified memory

- OS: macOS Sonoma

- Network: Localhost (eliminating network latency variables)

System Optimisations

Both platforms received identical system-level optimizations to allow them to handle high concurrency:

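```bash
# Network optimizations (sysctl -w requires root)
sudo sysctl -w kern.ipc.somaxconn=4096
sudo sysctl -w net.inet.tcp.msl=1000

# Open-file limits: raise the system-wide and per-process caps first
sudo sysctl -w kern.maxfiles=100000
sudo sysctl -w kern.maxfilesperproc=50000

# File descriptor limit for the shell that launches the server.
# macOS never grants a non-root process more than kern.maxfilesperproc (50,000 here).
ulimit -n 100000
```

Test Parameters (Identical for Both)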

- Target Connections: 25,000

- Connection Batch Size: 100

- Message Target: ~244,000 messages (established connections × 15, e.g. 16,292 × 15 = 244,380)

- Message Batch Size: 1,000

- Message Size: Standard JSON (not artificially inflated)

- Endurance Duration: 60 seconds

- Error Threshold: 5,000 (a reasonable tolerance)
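The message target and the open-file budget are easy to sanity-check before a run. The Go sketch below is not part of the original harness: `messageTarget`, `batchCount` and `checkFDLimit` are hypothetical helpers, and it assumes the ×15 multiplier is applied to the connections actually established, which is what makes ~16,300 connections come out near 244,000 messages.

```go
package main

import (
	"fmt"
	"syscall"
)

const (
	messageMultiplier = 15   // messages per established connection (assumed)
	messageBatchSize  = 1000 // messages sent per batch
)

// messageTarget applies the ×15 multiplier to the connections actually established.
func messageTarget(established int) int {
	return established * messageMultiplier
}

// batchCount is how many 1,000-message batches cover the target, rounded up.
func batchCount(target int) int {
	return (target + messageBatchSize - 1) / messageBatchSize
}

// checkFDLimit reports the soft RLIMIT_NOFILE and whether it covers `want`
// descriptors. Each WebSocket connection holds one descriptor in its process.
func checkFDLimit(want uint64) (uint64, bool, error) {
	var rl syscall.Rlimit
	if err := syscall.Getrlimit(syscall.RLIMIT_NOFILE, &rl); err != nil {
		return 0, false, err
	}
	return rl.Cur, rl.Cur >= want, nil
}

func main() {
	target := messageTarget(16292)
	fmt.Println(target, batchCount(target)) // 244380 245

	limit, ok, err := checkFDLimit(25000)
	if err != nil {
		panic(err)
	}
	fmt.Printf("soft NOFILE limit %d, enough for 25,000 connections: %v\n", limit, ok)
}
```

Since Go 1.19, programs that import package os raise their soft open-file limit to the hard limit at startup, so this check reports roughly what a Go server would actually get.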

Why We Went Raw Elixir

Initially, I considered using Phoenix Channels, but decided against it to make the comparison as fair as possible. Phoenix, while a fantastic framework, adds layers of abstraction (HTTP routing, PubSub, middleware) that don’t exist in a raw Go implementation.

To make this a true test of core concurrency models, we used a raw Elixir GenServer-based WebSocket server. This gives us:

- Direct WebSocket handling, similar to Go’s gorilla/websocket.

- Minimal abstractions, bringing it closer to Go’s approach.

- A pure focus on performance without framework overhead.

The Elixir Implementation

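```elixir
defmodule ChaosChat.Server do
  use GenServer

  # State: %{connections: %{id => pid}}, one pid per WebSocket connection process
  def start_link(opts \\ []) do
    GenServer.start_link(__MODULE__, %{connections: %{}}, opts)
  end

  @impl true
  def init(state), do: {:ok, state}

  # Ultra-optimized GenServer with minimal overhead
  @impl true
  def handle_call({:broadcast, message}, _from, state) do
    # Direct message broadcasting to all connections (send/2 is asynchronous)
    Enum.each(state.connections, fn {_id, pid} ->
      send(pid, {:broadcast, message})
    end)

    {:reply, :ok, state}
  end
end
```

This gives us the raw power of Elixir’s actor model without any of Phoenix’s abstractions.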
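For readers coming from Go, the closest analogue of this handle_call is a single goroutine that owns the connection map and serves broadcast requests over a channel, with a reply channel standing in for GenServer.call's synchronous reply. This is a hypothetical illustration (`server`, `broadcastReq` and the per-connection mailbox channels are invented names), not code from either benchmark.

```go
package main

import "fmt"

// broadcastReq mirrors GenServer.call(server, {:broadcast, msg}): the caller
// blocks on reply until the owning goroutine has fanned the message out.
type broadcastReq struct {
	msg   []byte
	reply chan struct{}
}

// server owns the connection map; only loop touches it, so no locks are needed.
type server struct {
	connections map[string]chan []byte // id -> per-connection mailbox
	calls       chan broadcastReq
}

func (s *server) loop() {
	for req := range s.calls {
		for _, mailbox := range s.connections {
			// Unlike send/2, this blocks if the mailbox buffer is full.
			mailbox <- req.msg
		}
		close(req.reply) // equivalent of {:reply, :ok, state}
	}
}

func (s *server) broadcast(msg []byte) {
	req := broadcastReq{msg: msg, reply: make(chan struct{})}
	s.calls <- req
	<-req.reply
}

func main() {
	s := &server{connections: map[string]chan []byte{}, calls: make(chan broadcastReq)}
	for _, id := range []string{"a", "b", "c"} {
		s.connections[id] = make(chan []byte, 8)
	}
	go s.loop()

	s.broadcast([]byte("hello"))
	for id, mailbox := range s.connections {
		fmt.Println(id, string(<-mailbox))
	}
}
```

The comment on the channel send marks the real design difference: a BEAM mailbox is unbounded, so send/2 never waits on a local process, while a Go channel has a fixed buffer and pushes back on the sender.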

The Go Implementation

To ensure this was a fair fight, I didn’t just write a simple Go server. I implemented a highly optimized WebSocket server using community best practices and patterns from the gorilla/websocket documentation.

Optimizations Applied:

- sync.Map for concurrent client storage

- Large read/write buffers

- Message batching in the writePump for efficiency

- Non-blocking operations that gracefully drop slow clients

- Atomic counters for thread-safe metrics
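Dropping slow clients is the item that most shapes behaviour under load, and the Hub excerpt that follows doesn't show it. Here is a minimal sketch of a hub fanning out without blocking, assuming a simplified `client` type (no websocket.Conn) and a hypothetical `fanOut` helper; the select with a default branch is the standard Go idiom for a non-blocking channel send.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// client is a simplified stand-in for the post's Client (no websocket.Conn).
type client struct {
	id     string
	send   chan []byte
	closed int32 // set atomically to 1 once send has been closed
}

type hub struct {
	clients sync.Map // id -> *client
}

// fanOut offers msg to every client without blocking. A client whose send
// buffer is already full is treated as too slow: it is removed from the map
// and its send channel is closed exactly once. Only the hub goroutine sends
// on client channels, so closing here cannot race with another send.
func (h *hub) fanOut(msg []byte) (dropped int) {
	h.clients.Range(func(key, value any) bool {
		c := value.(*client)
		select {
		case c.send <- msg:
		default:
			h.clients.Delete(key)
			if atomic.CompareAndSwapInt32(&c.closed, 0, 1) {
				close(c.send)
			}
			dropped++
		}
		return true
	})
	return dropped
}

func main() {
	h := &hub{}
	fast := &client{id: "fast", send: make(chan []byte, 4)}
	slow := &client{id: "slow", send: make(chan []byte, 1)}
	slow.send <- []byte("backlog") // slow client's buffer is already full
	h.clients.Store(fast.id, fast)
	h.clients.Store(slow.id, slow)

	fmt.Println("dropped:", h.fanOut([]byte("hi"))) // dropped: 1
}
```

Closing the slow client's channel is what lets its writePump's range loop finish and release the connection.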

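```go
package chat

import (
	"sync"

	"github.com/gorilla/websocket"
)

// Ultra-optimized Hub with sync.Map for concurrent access
type Hub struct {
	clients    sync.Map // chosen over mutex + map for read-heavy concurrent access
	broadcast  chan []byte
	register   chan *Client
	unregister chan *Client
}

// Optimized client with larger buffers
type Client struct {
	hub    *Hub
	conn   *websocket.Conn
	send   chan []byte // created with a 1024-message buffer
	id     string
	closed int32 // atomic flag
}

// writePump drains c.send, batching up to 100 queued messages per frame.
func (c *Client) writePump() {
	defer c.conn.Close()
	for msg := range c.send {
		w, err := c.conn.NextWriter(websocket.TextMessage)
		if err != nil {
			return
		}
		w.Write(msg)

		// Append messages already waiting in the queue, newline-separated.
		n := len(c.send)
	batch:
		for i := 0; i < n && i < 100; i++ {
			select {
			case next := <-c.send:
				w.Write([]byte{'\n'})
				w.Write(next)
			default:
				break batch // a bare break would only exit the select
			}
		}

		if err := w.Close(); err != nil {
			return
		}
	}
}
```

The Results: Battle of the Architectures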

With the rules set and the contenders ready, the battle unfolded in three phases: connection establishment, burst messaging, and sustained endurance.

Phase 1: Connection Establishment

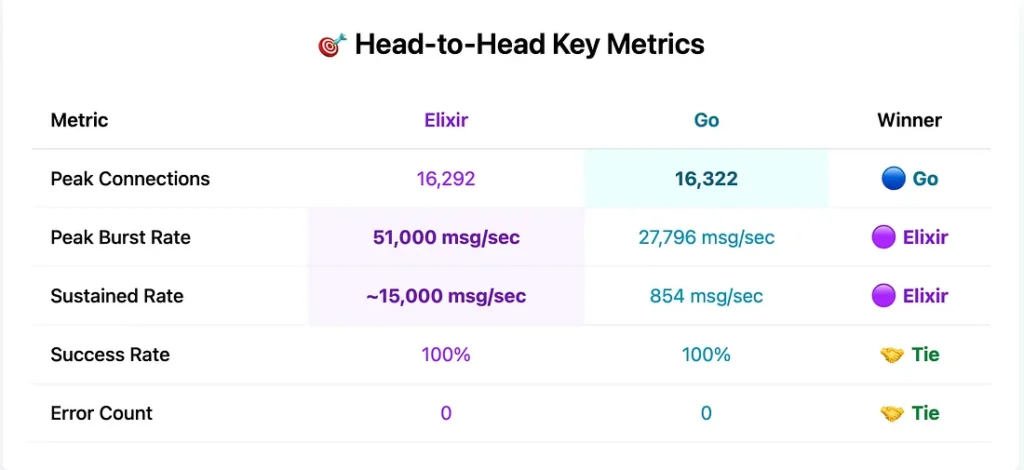

Both platforms attempted to establish 25,000 concurrent WebSocket connections. This phase was a near-tie.

- Elixir: 16,292 connections

- Go: 16,322 connections

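Neither platform reached the 25,000 target: both stalled at the same system-level limit, around 16,300 connections, and the 30-connection difference is negligible. Because the ceiling came from the operating system rather than either runtime, this phase doesn’t separate the two, but it does show that both Elixir and Go comfortably hold more than 16,000 concurrent WebSocket connections.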

Phase 2: Burst Message Performance

Next, we sent ~244,000 messages as fast as possible to all established connections. This is where the first decisive victory was won.

- Elixir: 51,000 msg/sec

- Go: 27,796 msg/sec

Elixir’s raw actor model proved exceptionally efficient under extreme load. The Erlang VM’s preemptive scheduler and isolated process memory likely helped it manage this volume of message passing, delivering roughly 1.8× Go’s throughput.

Phase 3: Endurance Testing

This is where the most dramatic difference appeared. We sent messages at a sustained pace for 60 seconds to test resource stability and performance degradation.

- Elixir: ~15,000 msg/sec sustained

- Go: 854 msg/sec sustained

Elixir settled at roughly 15,000 msg/sec, about 29% of its burst rate, and held that level for the full run. Go, however, showed a dramatic 96.9% drop from its peak burst rate (854 vs. 27,796 msg/sec). The likely culprits, though confirming them would take profiling, are garbage collection pressure and goroutine scheduling overhead with 16k+ concurrent goroutines trying to send and receive messages. Elixir’s per-process garbage collection and isolated state appear to shine in these sustained, high-throughput scenarios.

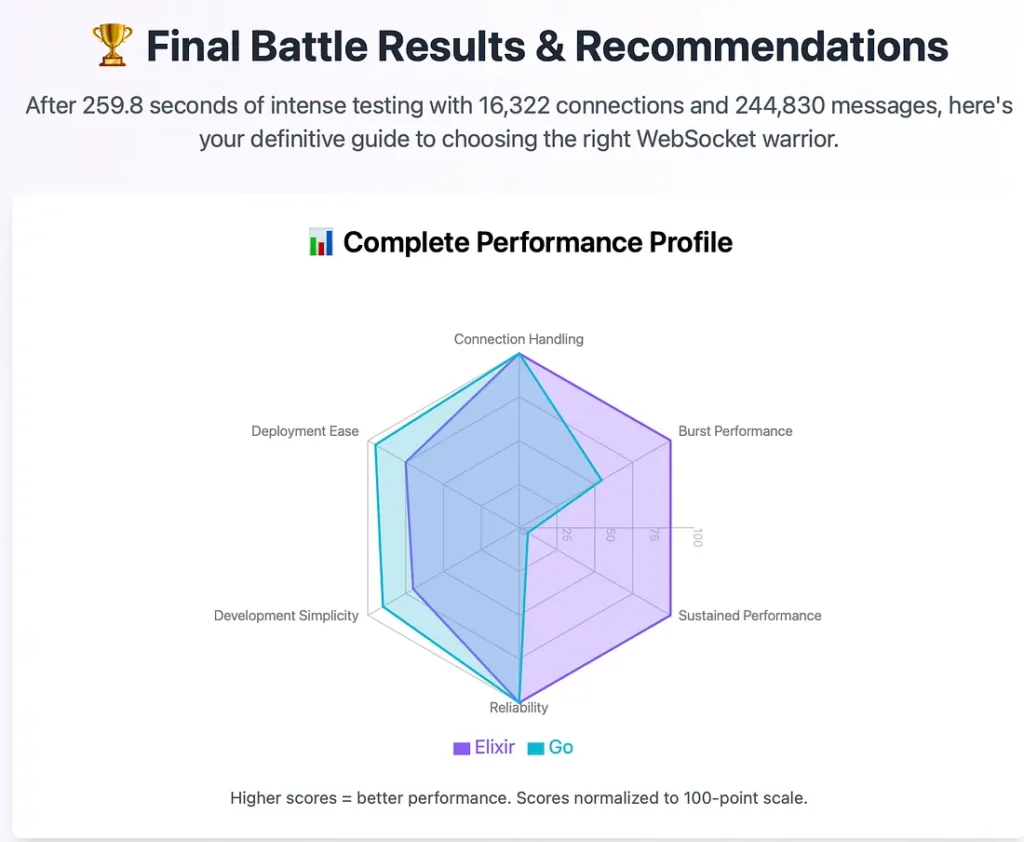

The Final Verdict

Both platforms showed perfect reliability on the connections they established, with 100% message success rates and zero errors in all phases. This is a testament to the robustness of both languages.

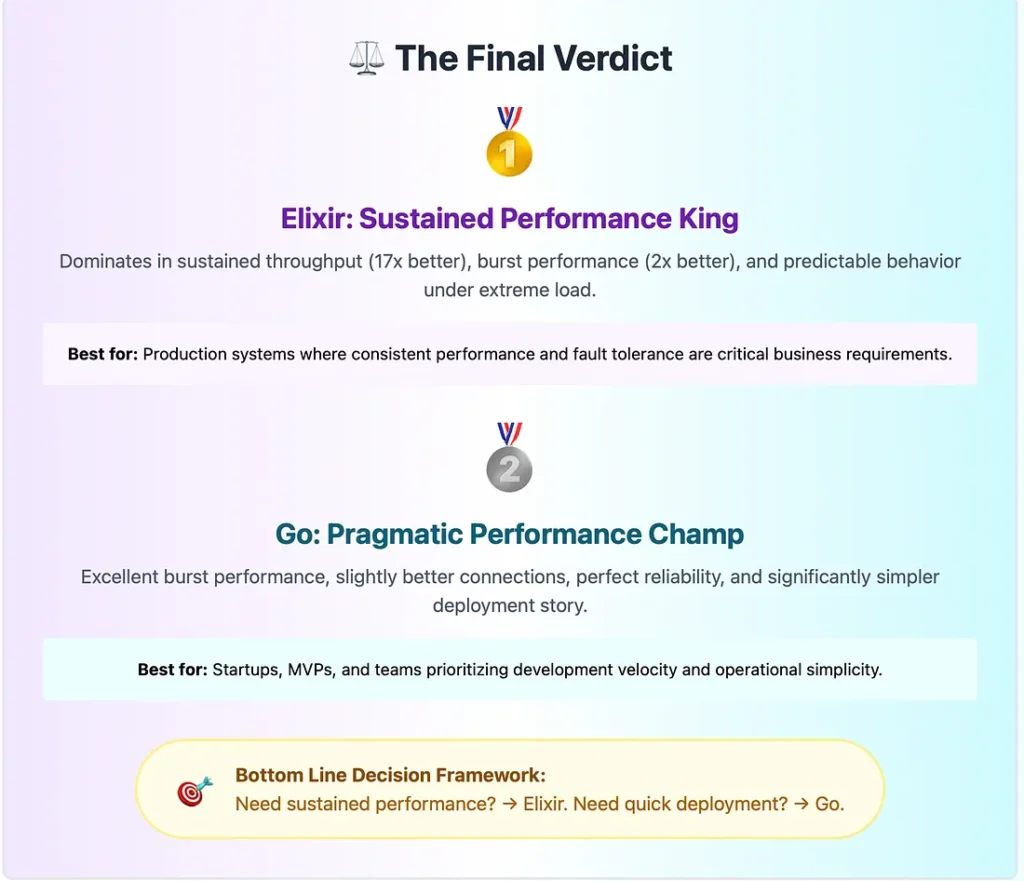

So, who won? It depends entirely on your use case.

The Bottom Line: Who Wins for Your Use Case?

Choose Elixir/BEAM When:

- High-concurrency messaging is your primary use case.

- Sustained performance and predictability under load are critical.

- You need built-in fault tolerance and “let-it-crash” reliability.

Choose Go When:

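- Your traffic is bursty rather than sustained: the gap between the two was much smaller in the burst phase (Elixir about 1.8× faster) than in the endurance phase (about 17.6×).

- A lower memory footprint is crucial (memory use wasn’t measured in these tests).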

- Your team’s expertise is primarily in Go, or you need a single, easy-to-deploy binary.

This comparison highlights why fair benchmarking matters. Both platforms can handle substantial WebSocket workloads reliably. The key is understanding which architecture best fits your application’s unique performance requirements.

What’s your experience with WebSocket performance in production? Have you seen different results with other optimisations? I’d love to hear your thoughts and experiences in the comments!

I’m a backend engineer working on high-performance Elixir applications. While I’m still learning Go optimisation techniques, I believe in fair, transparent performance comparisons that help developers make informed technology choices.

Next findings: Episode 2

If you liked this post, consider buying me a coffee 😝 https://buymeacoffee.com/y316nitka